This list is no longer kept up to date. Please reach out to request slides from any talk I have given recently.

Beyond Accuracy

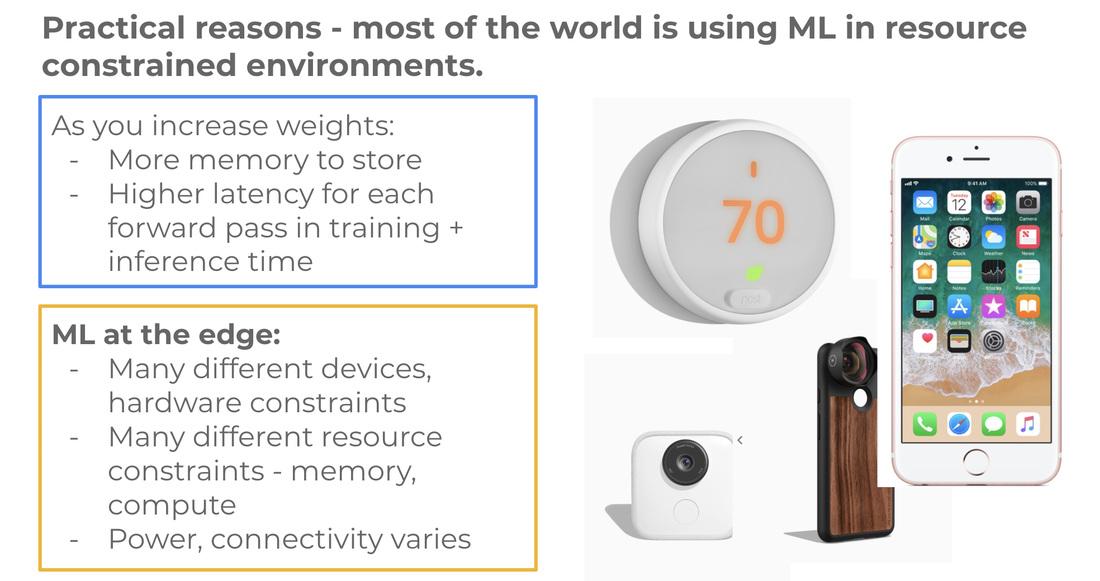

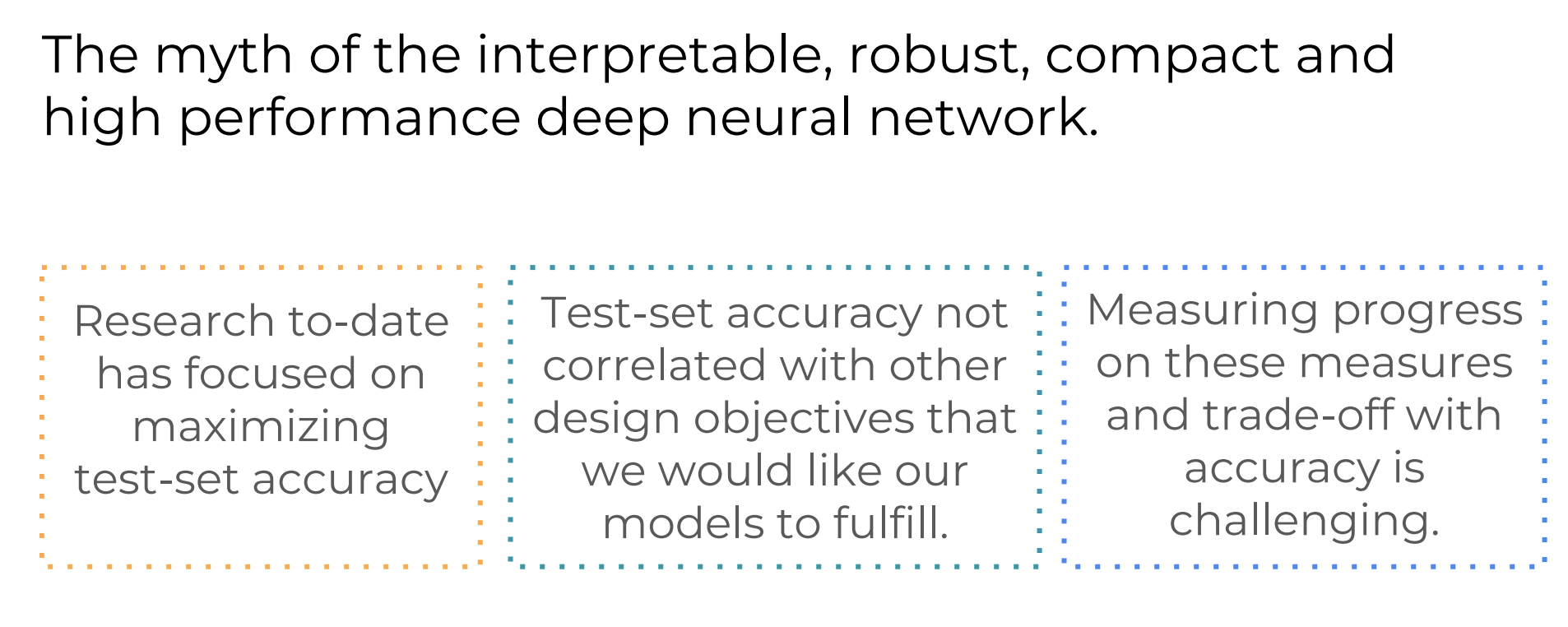

Re-work Toronto 2018. Deep neural networks have made a spectacular leap from research to the applied domain. Convolutional neural networks have enabled large gains in test-set accuracy on image classification tasks. However, there is consensus that test-set accuracy alone does not capture other properties we may care about, such as adversarial robustness, fairness, model compression, or interpretability. In this talk, we will consider some of the challenges of measuring progress toward training models that fulfill multiple desirable properties at once. Slides here, video here.

ROAR: RemOve And Retrain

ICML 2018. Estimating the influence of a given feature on a model prediction is challenging. We introduce ROAR, RemOve And Retrain, a benchmark to evaluate the accuracy of interpretability methods that estimate input feature importance in deep neural networks. This evaluation produces thought-provoking results: we find that several estimators are less accurate than a random assignment of feature importance. Slides here.

Beyond accuracy: the desire for interpretability and the related challenges.

October 2018. Slides here.

Explaining Deep Neural Network Model Predictions

PyBay, San Francisco. How can we explain how deep neural networks arrive at decisions? Feature representations are complex and opaque to the human eye; instead, a set of interpretability tools infers what the model has learned by looking at which inputs it pays attention to. This talk will introduce some of the challenges of identifying these salient inputs and discuss the properties such methods should fulfill in order to build trust between humans and algorithms. Slides here, video here.

Engineering Human Vision: An Introduction to Convolutional Neural Networks.

Tutorial at the Data Science Africa Summer Machine Learning School. A technical introduction to convolutional neural networks. Slides here.

Talk at the Data Institute — October 22nd, 2017

How can we explain how deep neural networks arrive at decisions? Feature representations are complex and opaque to the human eye; instead, a set of methods called saliency maps infers what the model has learned by looking at which inputs it pays attention to. This talk will introduce some of the challenges of identifying these salient inputs and discuss the properties such methods should fulfill in order to build trust between end users and algorithms.

2017 Data for Good Community Celebration: Delta's first-ever community celebration with the USF Data Institute, featuring five lightning talks and a poster session to highlight Delta’s work in 2018.

Slides here. I gave a lightning talk about our efforts to build machine learning capacity around the world.

ML Conf Seattle 2017 — I presented with Steven McPherson on “Stopping Illegal Deforestation Using Deep Learning”

Slides here.

Northwestern Global Engagement Summit 2017. I hosted a workshop with Jonathan Wang on “Storytelling with Data” for nonprofits from around the world. We both also served as mentors for the projects going forward.

Slides here.

AMA Google Brain. Ask Me Anything on Reddit. Not a talk, but a very fun chance to share my experience as a Google AI Resident and my research at Brain.

Accelerating mobile learning in Kenya. Slides here.